David Vuong, Emma Tosaya, Jane Heathcote, Kai Garcia

If you have ever played an online game of any kind, chances are you have run into a toxic player or two. Online gaming has a long, deep-rooted history of toxicity, often attributed to the violent or competitive nature of many games. However, toxicity can stem from a variety of sources, from racism and sexism to a player’s enjoyment of toxic environments. This article examines the triggers of toxicity in the online first-person shooter (FPS) Valorant. From the moment it was announced, Valorant was one of the most anticipated game releases of 2020. Its release coincided with the COVID-19 quarantine, which gave it a drastic boost in popularity and a uniquely diverse player base, including a rising number of female FPS players. Focusing specifically on toxicity directed at female players, we analyze randomly selected interactions between players, based on word choice and context, to identify in-game triggers for toxicity.

Introduction

For those unfamiliar with the game, Valorant is a team-based tactical shooter played online in teams of five. Each player is assigned a rank based on skill level, ranging from Iron 1 (lowest) to Radiant (highest), as displayed in Figure 1, and an account level, which indicates how much an individual has played the game on a particular account, as displayed in Figure 2.

The small team sizes and hierarchical structure make it easy to single out fellow players, and the fast-paced, competitive gameplay leads to frequent incidents of toxic behavior. The format of in-game statistics makes performance-based toxicity even easier (see Fig. 3). Players can see both their own team’s and the opposing team’s statistics for a particular game. Each player’s username is displayed next to an image of their chosen agent and their rank, followed by their KDA (kills, deaths, and assists), a loadout showing which weapon they are using, and credits showing their current money. All of this information is necessary for informed gameplay, but it also makes it easy to target a specific player, such as the person with the fewest kills.

Riot, the developer of Valorant, has made several attempts to quash performance- and rank-based toxicity. For example, visible ranks were removed from the game, so the rank information shown next to the agent image in Figure 3 no longer appears. Despite these attempts, there has been no documented decrease in rank toxicity. A recent survey by the Anti-Defamation League found that 89% of young gamers between the ages of 13 and 17 had experienced a disruptive, negative encounter in Valorant during the past six months (ADL, 2021). Toxicity has become an expected part of Valorant, even though such “deviance” in games drives away new players and reinforces a game’s reputation for toxicity (Shores et al., 2014).

In this study, we wanted to dig deeper into the linguistic phenomena involved in this toxicity and explore how a player’s word choice and lexicon give insight into why they might contribute to such a toxic environment. Analyzing the context and frequency of toxic statements is important for understanding the triggers of toxicity in a game built around teamwork. We analyzed audio recordings and chat transcripts from a variety of sources, predicting that gameplay-based comments would be the most common form of toxicity, but that other factors such as gender or race would play a key role in players’ decisions to make these comments.

Methods

There were two parts to our study: interaction analysis and community familiarity.

Part 1: Interaction Analysis

Using a variety of publicly available platforms, we collected random clips of toxic in-game encounters from YouTube, Twitch, and TikTok. Twitch is a platform on which people stream their gameplay live to a virtual audience, and TikTok is a platform on which users post short clips or compilations. Using Twitch’s Valorant category, TikTok’s hashtag search, and clips requested from anonymous individuals, we analyzed 10 randomly selected clips of female Valorant players. Because the Valorant player base is overwhelmingly male, and because sexism was one of our categories, we focused specifically on female-directed toxicity to avoid skewing the data. In each clip we looked for two instances of female-received toxicity, which we called the primary and secondary interactions. The primary interaction was the first identifiable toxicity aimed at the female player after she had spoken, and the secondary interaction was the second instance of toxicity. After transcribing these interactions, we placed each one into one or more of four categories based on the toxic word choice employed: sexism, profanity, performance, or racism. For example, an interaction in which a male teammate referred to the female gamer’s bad play as a “woman moment” was placed in the “sexism” category. The primary and secondary interactions could be of the same or different types (e.g., a performance-based primary and a sexist secondary), and some single interactions contained more than one type of toxicity. When an interaction contained more than one category, every category noted was counted when compiling the final data.

Part 2: Community Familiarity

We also wanted to see how familiar the toxic Valorant jargon was to people outside the community of practice, and what they thought of the nature of the negative interaction. To do this, we created a familiarity survey and sent it to several non-Valorant players. The survey consisted of one of our interaction transcripts followed by analysis questions. A total of 5 participants (4 female and 1 non-binary) were shown the transcript. We asked them to describe the nature of the interaction and to elaborate on the potential thought processes of both the female and male gamers.

The questions were kept as general as possible, and both the questions and the transcript were sent via text to avoid biasing participants toward a specific category of toxicity. The first question was simply, “How would you describe this interaction?” The following two questions asked how participants thought each player perceived the interaction, essentially checking whether participants viewed the interaction the same way our data collection did: as sexist, racist, performance-based, or profanity-based toxicity.

Analysis

Please note that the following video and data include offensive language.

The video below contains a clip from one of our randomly selected subjects who experienced toxicity in game.

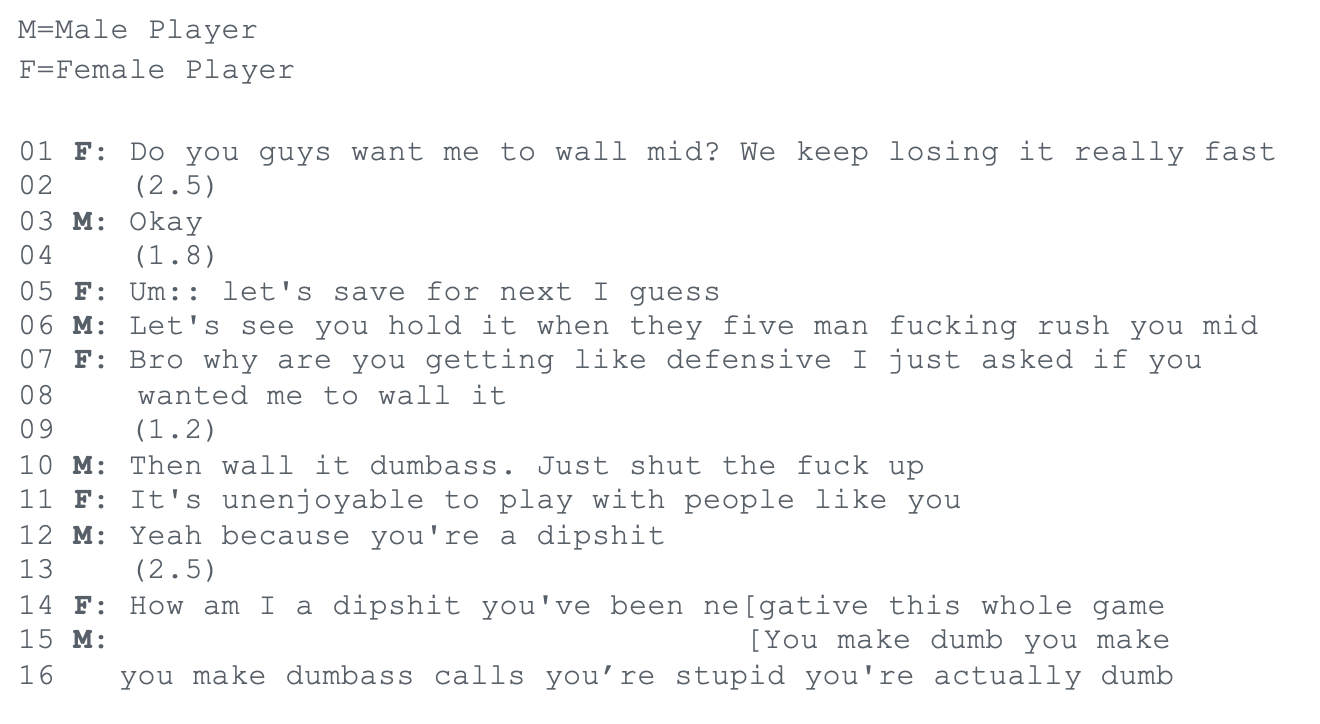

The following excerpt shows an example of how the project categorized terminology and phrases to collect data on types of toxicity. It is also the excerpt that was provided to participants outside the Valorant community for familiarity testing.

The excerpt opens with a question from the female player, directed at her teammates and related to general game strategy. The primary toxic interaction occurs in Line 6, when the male player questions the female player’s ability to perform a game-related task (holding a site against the enemy team). The game terminology in this line flags it as performance-based toxicity under our categorization method. After the female player calls out his toxicity (Lines 7–8), there is a brief pause (1.2 seconds, Line 9) before he insults her and her abilities again in Line 10. This is the secondary interaction, and it is another example of a performance-based toxic comment.

Although the interaction continues past this point, we focused on the primary and secondary interactions, since we were interested only in what initially triggers the toxic encounter. Using the same method, we analyzed the other randomly gathered clips and compiled our final results.

Results

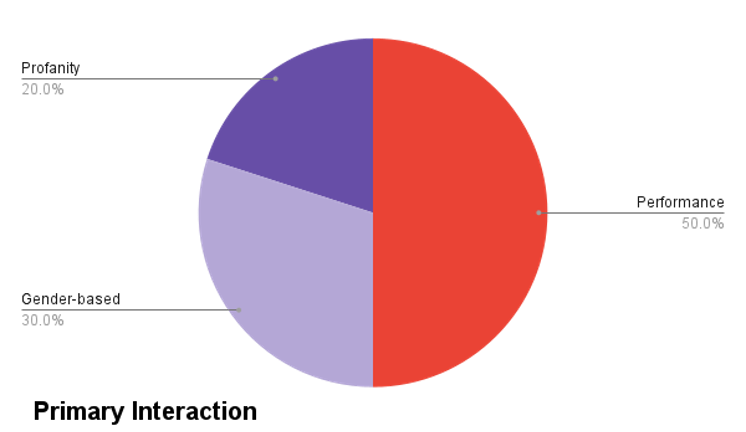

Overall, the most frequent type of toxicity at the start of a negative interaction was performance-based insults, which aligns with our hypothesis. Figure 4 shows that performance-based toxicity appeared first in half of the videos we analyzed, followed by gender-based discrimination (30%) and general profanity directed at the female player (the remaining 20%).

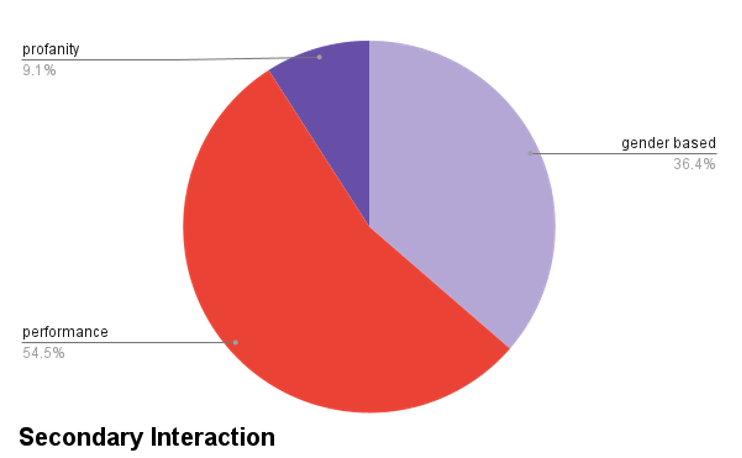

Performance was even more prevalent in the secondary interaction, making up 54.5% of the comments, as seen in Figure 5. Gender-based toxicity and general profanity again accounted for the remaining 45.5% of the interactions recorded.
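Because a single interaction could be assigned more than one category, these percentages appear to be shares of category tags rather than of clips: with ten single-label clips every share would be a multiple of 10%, whereas 54.5% is consistent with, for example, 6 of 11 tags. The Python sketch below illustrates that counting rule under this assumption. The function name `category_shares` and the example labels are hypothetical, chosen for illustration only; they are not our actual tooling or data.

```python
from collections import Counter

# The four toxicity categories used in the study.
CATEGORIES = ("sexism", "profanity", "performance", "racism")


def category_shares(interactions):
    """Return each category's percentage share of all category tags.

    `interactions` holds one entry per interaction; each entry lists every
    category noted for that interaction. A two-category interaction therefore
    adds two tags to the denominator (hypothetical helper, for illustration).
    """
    tags = Counter(tag for interaction in interactions for tag in interaction)
    total = sum(tags.values())
    if total == 0:
        # No tagged interactions: report zero for every category.
        return {category: 0.0 for category in CATEGORIES}
    return {category: round(100 * tags[category] / total, 1) for category in CATEGORIES}


# Hypothetical example (NOT study data): three interactions, one of which
# was tagged with two categories, giving four tags in total.
example = [["performance"], ["performance", "sexism"], ["profanity"]]
print(category_shares(example))
# {'sexism': 25.0, 'profanity': 25.0, 'performance': 50.0, 'racism': 0.0}
```

Here three interactions yield four tags, so performance accounts for 50% of tags even though it appears in two of the three interactions (about 67%). Counting tags keeps every noted category in the data, at the cost of percentages that no longer map directly onto numbers of clips.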

No racist toxicity was documented as an immediate trigger for negative encounters, even though racism remains a known issue in Valorant. We did find spoken racial slurs in the videos analyzed; however, because they were not the first or second instance of toxicity, they were not included in our analysis.

After sending the example transcript to individuals outside the Valorant community, we analyzed their answers to determine whether they also regarded the comments as negative. As shown in Figure 6, all five individuals described the interaction using words with negative connotations. When asked about the potential thought processes behind the male player’s toxicity, participants most commonly cited gender-based stereotypes and frustration or defensiveness over game performance. This aligns with the results of our data analysis and suggests that individuals outside the community also recognize this kind of game toxicity as negative.

Discussion and Conclusions

As stated in the Results section, most of the toxic comments were classified as performance-based toxicity. However, contrary to our initial hypothesis, the triggers for these cases of toxicity cannot be defined by word choice alone. In most of the clips collected, the toxic player was underperforming, while the female player receiving the toxicity was outperforming them. This suggests that the trigger for toxicity was not simply low performance, and that factors beyond gameplay played a key role in toxic behavior. Additionally, participants who read the transcript in our survey described the interaction as extremely negative, with misogynistic undertones. Not only was the toxicity understandable to people outside the community of practice, but the responses also suggest that participants believed the triggers extended beyond surface-level word choice. With that in mind, we can view these comments not only as performance-based toxicity but also as evidence that sexism is an underlying trigger for in-game toxicity.

As also noted in the Results, this project did not yield any data on racism as a trigger. Future studies could nonetheless focus on racial discrimination as a trigger for toxicity within Valorant. Because a player’s voice is the only in-game cue to their race, a different data collection method would be needed to study racism in-game. For example, future studies could have subjects who speak African American Vernacular English, or subjects with non-standard American accents, volunteer clips of their toxic interactions, rather than limiting the subjects to female players. Previous research (Buyukozturk, 2016) has found that one of the major contributors to racial toxicity in gaming is the obscured nature of online interaction. Toxic gamers can draw stereotype-laden conclusions about a player based solely on their voice, and they may express their toxicity more readily than they would in real life because they feel safe hidden behind a digital avatar. It would be interesting to analyze the word choice and context of racist gaming encounters and compare them to documented examples of in-person racist speech.

Future analyses would need a much larger data sample to support the current findings. A larger data set would not only provide more conclusive results but also help expand the scope of toxic behaviors beyond our four categories (sexism, profanity, performance, and racism). As Valorant is still a relatively new game (less than 2 years old), much more research could be done to explain toxic trends. The broader implications of these findings could extend to other phenomena in society as well, such as the reasons behind toxicity toward female colleagues in male-dominated work environments. Studying the triggers of this deviance has the potential to increase awareness of harassment and the general toxicity prevalent in all aspects of life.

References

Anti-Defamation League. (2021, September 15). Most U.S. Teens Experienced Harassment When Gaming Online, ADL Survey Finds. https://www.adl.org/news/press-releases/most-us-teens-experienced-harassment-when-gaming-online-adl-survey-finds

Beres, N.A., Frommel, J., Reid, E., Mandryk, R.L., & Klarkowski, M. (2021). Don’t You Know That You’re Toxic: Normalization of Toxicity in Online Gaming. Proceedings of the 2021 CHI conference on Human Factors in Computing Systems.

Buyukozturk, B. (2016). Race, Gender, and Deviance in Xbox Live: Theoretical Perspectives from the Virtual Margins. Sociology of Race and Ethnicity, 2(3), 387–398. https://doi.org/10.1177/2332649216645529

Cook, C. L. (2019). Between a troll and a hard place: The demand framework’s answer to one of gaming’s biggest problems. Media and Communication, 7(4), 176–185. https://doi.org/10.17645/mac.v7i4.2347

Kowert, R. (2020). Dark participation in games. Frontiers in Psychology, 11, 598947. https://doi.org/10.3389/fpsyg.2020.598947

Shores, K., He, Y., Swanenburg, K.L., Kraut, R.E., & Riedl, J. (2014). The identification of deviance and its impact on retention in a multiplayer game. Proceedings of the 17th ACM conference on Computer supported cooperative work & social computing. https://dl.acm.org/doi/10.1145/2531602.2531724