Kissan Desai, Elizabeth Reza, Aaron Zarrabi

In today’s society, artificial intelligence has reached levels once deemed unimaginable, advancing from simple computer programming to tasks such as mimicking human emotional body language (EBL). The question at hand is: to what extent can artificial intelligence “accurately” express human EBL? We addressed this question through our own research with UCLA undergraduate juniors and seniors. We first asked participants to fill out a survey gathering their demographic information, then conducted a Zoom interview for the experimental portion. Each participant was shown twelve images (6 AI and 6 human) depicting EBL. We found that AI can accurately mimic specific human bodily emotions; however, humans are better able to identify emotions expressed by other humans than by AI. On the role of ethnicity, culture, and gender, participants were split: only some believed these factors affected their ability to correctly identify the EBL of humans and AI. Our research can help technology continue to evolve, possibly to a point where society can no longer distinguish between AI and humans.

Figure 1: The Dangers of Misinterpreting Body Language! (A scene in which nonverbal communication is completely misinterpreted, showing the consequences of the inability to understand.)

Introduction and Background

In order to communicate with others, humans use both verbal and nonverbal modes of communication. One mode of nonverbal communication is emotional body language (EBL). In our research, we defined EBL as physical behaviors, mannerisms, and facial expressions, whether purposeful or subconscious, that are perceived and treated as meaningful gestures relaying emotional significance to the onlooker (de Gelder, 2006). The progressive development of artificial intelligence allows AI to mimic human behaviors (Embgen et al., 2012) as well as assess individuals’ communication, including EBL, as seen in the use of AI in job interviews (Nordmark, 2020). Together, these factors led us to ask: to what extent can current AI “accurately” express human EBL?

Our target population for this research was college students, as they may potentially work alongside AI in their future workplaces. Our sample population was made up of UCLA juniors and seniors. The “accuracy” of AI’s EBL was based on the ability of our participants to identify AI expressing the emotions of anger, disgust, fear, happiness, sadness, and surprise, as compared to their ability to identify the same EBL expressed by humans. Through our research, we aimed to answer the question: To what extent can university students understand the emotions expressed by artificial intelligence through its utilization of EBL to communicate nonverbally?

We hypothesized that our participants would be able to identify AI EBL, though not as well as they would be able to identify human EBL. We further hypothesized that differences in EBL interpretation among our participants would stem from their cultural differences, since culture would be a main point of difference among participants who were all of a similar age and attended the same school.

Through our project, we aimed to analyze differences in individuals’ interpretations of human and AI EBL, in the hope of identifying correlations in how different groups interpret certain gestures, or whether some EBL is universal. We were also curious whether AI would be able to capture EBL that is naturally seen in humans (Hertfordshire, 2012). Finally, we understand the importance of differing cultural norms regarding the body and were attentive to this when asking others to study EBL, not only viewing the “multimodality in human interactions,” but also considering the effects of different cultures as technology and tradition collide with one another (Macfayden, 2023).

Methods

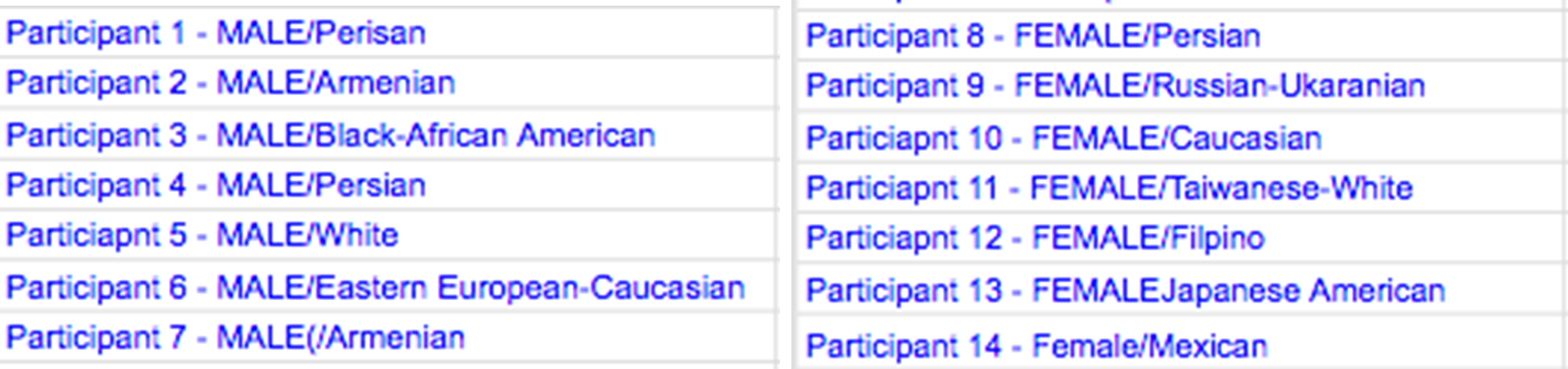

Our experiment combined qualitative research with thematic analysis, as we looked for common themes in human-to-human and human-to-technology interaction. Previous experiments, such as Stephanie Embgen’s (Embgen et al., 2012), show that humans are capable of identifying emotions through AI EBL. For our experiment, we surveyed and interviewed 14 UCLA juniors and seniors.

Each participant was asked to fill out a Google Form providing their demographic information.

Figure 2: Link to Google Form filled out by participants

https://docs.google.com/forms/d/e/1FAIpQLSf01ZG57zuMVyc99y3Ew1HtaRvEIxOj8lXhSUCt7u4VdX-xhg/viewform?vc=0&c=0&w=1&flr=0

After the survey, we met with our participants via Zoom for an interview. Throughout the interview, our cameras and audio were off while the participants’ were on. We took this precaution to avoid any bias that could arise from participants seeing our body language or hearing tonal changes in our voices. We presented the participants with two sets of six images depicting the emotions of anger, disgust, fear, happiness, sadness, and surprise. The images were sorted randomly, alternating between human and AI. The human images were created by us, while the AI images were of Kobian, a robot created at Waseda University in Japan that is able to display numerous human emotions (Takanishi Laboratory, 2015).

After each image, our participants identified the emotion, explained why they chose that emotion, and described how they would express that emotion themselves; they were then told which emotion was shown. At the end of the interview, we asked our participants whether they felt there were any discrepancies in their results due to their culture, ethnicity, or gender. This question is examined in the work of Miramar Damanhouri (2018), who asserts that body language and other forms of non-verbal communication can lead to misinterpretation because different cultures and ethnicities have different rules and verbal cues.

Figure 5: Link to Examine Interview Powerpoint and Process

https://docs.google.com/presentation/d/1ocxmTIu7mlOzKg7Y7jGVJn5i3BOIRVXSQpSu7iSWZxQ/edit?usp=sharing

Results

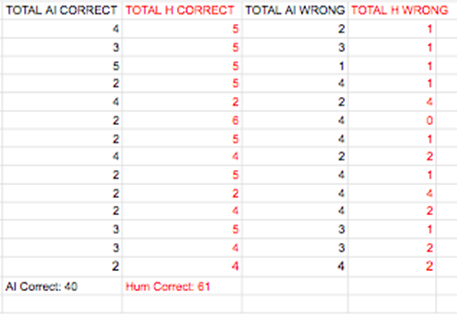

Our results showed which emotions participants identified with ease and which they found difficult. Participants had difficulty identifying the emotional expressions of Happiness (only 4 correct), Fear (only 1 correct), and Anger (only 5 correct) when expressed by AI. On the other hand, 14 participants correctly identified Happiness and 10 correctly identified Surprise when examining AI. When examining human EBL, participants had difficulty identifying surprise (5 correct) but easily identified happiness (13 correct), sadness (11 correct), disgust (11 correct), and anger (10 correct). As a whole, though, participants took more time to identify AI EBL, even the examples they got correct, than human EBL, some of which they identified instantly.
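To make the counts above easier to compare, they can be converted into shares of participants. The following is a minimal sketch, not part of our original analysis. It assumes that every image was shown to all 14 participants, and it leaves out AI happiness because the passage above gives two different counts for it; the function names are illustrative only.

```python
# Counts of correct identifications as reported in the text above.
# Assumption: each image was seen by all 14 participants.
PARTICIPANTS = 14

human_correct = {
    "happiness": 13,
    "sadness": 11,
    "disgust": 11,
    "anger": 10,
    "surprise": 5,
}

# AI happiness is omitted: the text reports two different counts for it.
ai_correct = {
    "surprise": 10,
    "anger": 5,
    "fear": 1,
}


def accuracy(correct: int, total: int = PARTICIPANTS) -> float:
    """Share of participants who identified the emotion correctly."""
    return correct / total


def accuracy_table(counts: dict) -> dict:
    """Map each emotion to its accuracy, rounded to two decimals."""
    return {emotion: round(accuracy(n), 2) for emotion, n in counts.items()}


if __name__ == "__main__":
    for label, counts in (("human", human_correct), ("AI", ai_correct)):
        for emotion, share in accuracy_table(counts).items():
            print(f"{label:<6}{emotion:<10}{share:.0%}")
```

Under these assumptions, the two emotions reported for both sources point in opposite directions: anger was identified more often in the human image, while surprise was identified more often in the AI image.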

We hypothesized that differences in EBL interpretations would be due, in part, to the cultural, ethnic, and gender differences among our participants. Participants were split on whether their ethnicity, gender, or culture had played a part in their responses: 6 said it had, 2 said maybe, and 7 said it had not. For example, one participant explained that people within their culture do not show much emotion and tend to be very straight-faced, and that this affected their responses.

Overall, our results showed that humans identify emotions expressed by other humans better than emotions expressed by AI. However, it is important to note that participants identified certain AI emotions more accurately than their human counterparts, showing that there is at least some level of shared understanding between humans and AI. This is something society continues to advance as it attempts to make AI mimic human emotions.

Figure 8: Link to Results

https://docs.google.com/spreadsheets/d/1TuvKT66sn2laSej50YjwplEUTSAX-DYyMMHUxYrVDMA/edit?usp=sharing

Discussion and Conclusions

In conversations with our participants, we found that certain human tendencies in EBL were lacking in the AI representations, such as smile lines around the eyes or flushed cheeks, both of which participants considered integral to identifying certain emotions. AI was unable to mimic these characteristics in the images we used. We also found discrepancies in how our participants viewed EBL based on how they personally expressed the emotion. We asked participants whether they felt they displayed each emotion in the same way as the image, and for images they had difficulty identifying, such as the human “surprise” example and the AI “fear” example, a majority shared that they did not express the emotion in the same way. One factor that may have contributed to these difficulties is that the human images were made by us, as the researchers, based on our own individual perceptions of the EBL. Not all EBL is universal, and so differences in this vein could have affected those answers.

In addition, the results regarding how much our participants’ culture, ethnicity, and gender affected how accurately they identified the EBL displayed were too evenly split to draw any formal conclusions. Our hypothesis that the discrepancies between their responses and the correct answers would be due to these factors therefore cannot be proven or disproven at this time. Further research is needed to analyze that question more thoroughly.

Overall, these findings can help to fine-tune AI imitations of body language as AI continues to develop, as it has not yet mastered human EBL. Our findings also emphasize how both differences and uniformity in expressing oneself through body language create meaning through gestures that can be understood (or misunderstood) by others who express themselves in similar or different ways.

References

Damanhouri, M. (2018). The advantages and disadvantages of body language in Intercultural communication. Khazar Journal of Humanities And Social Sciences, 21(1), 68–82. https://doi.org/10.5782/2223-2621.2018.21.1.68

de Gelder, B. (2006). Towards the neurobiology of emotional body language. Nature Reviews Neuroscience, 7(3), 242–249. https://doi.org/10.1038/nrn1872

Embgen, S., Luber, M., Becker-Asano, C., Ragni, M., Evers, V., & Arras, K. O. (2012). Robot-Specific Social Cues in Emotional Body Language. 2012 IEEE RO-MAN: The 21st IEEE International Symposium On Robot and Human Interactive Communication, 1019–1025. https://doi.org/10.1109/ROMAN.2012.6343883.

Hertfordshire, A. B. U. of, Beck, A., Hertfordshire, U. of, Portsmouth, B. S. U. of, Stevens, B., Portsmouth, U. of, Kim A. Bard University of Portsmouth, Bard, K. A., Hertfordshire, L.U. of, Cañamero, L., & Metrics, O. M. V. A. (2012, March 1). Emotional body language displayed by artificial agents. ACM Transactions on Interactive Intelligent Systems. Retrieved January 27, 2023, from https://dl.acm.org/doi/abs/10.1145/2133366.2133368

Macfayden, L. (n.d.). Virtual ethnicity: The new digitization of place, body, language, and …. Retrieved January 27, 2023, from https://open.library.ubc.ca/media/download/pdf/52387/1.0058425/

Nordmark, V. (2020, March 16). What are AI interviews? Hubert. https://www.hubert.ai/insights/what-are-ai-interviews#%3A~%3Atext%3DAI%20assessment%20filters%20the%20applications%2Cof%20behavior%20and%20team%20fit

Takanishi Laboratory. (2015, June 24). Emotion Expression Biped Humanoid Robot KOBIAN-RIII. Takanishi.mech.waseda.ac.jp. Retrieved February 21, 2023, from http://www.takanishi.mech.waseda.ac.jp/top/research/kobian/KOBIAN-R/index.htm

YouTube. (2015, November 5). Nonverbal communication- gestures. YouTube. Retrieved February 14, 2023, from https://www.youtube.com/watch?v=0cIo0PkBs2c